Mirror, Scale, Reflect

How I Built an AI That (Almost) Thinks Like Me

Why I Built an AI That Argues With Me

Most people use AI like a vending machine.

You press a button, you get an answer, you move on.

I don't want that.

I want it to push back. To challenge me. To help me become someone worth scaling.

Over the last few years, I've been quietly building AI systems: not flashy agents or automation hacks, but something stranger. More intimate.

I've been trying to build a mirror that can think like me.

Not in the "replace my brain" way. In the "force me to confront my brain" way.

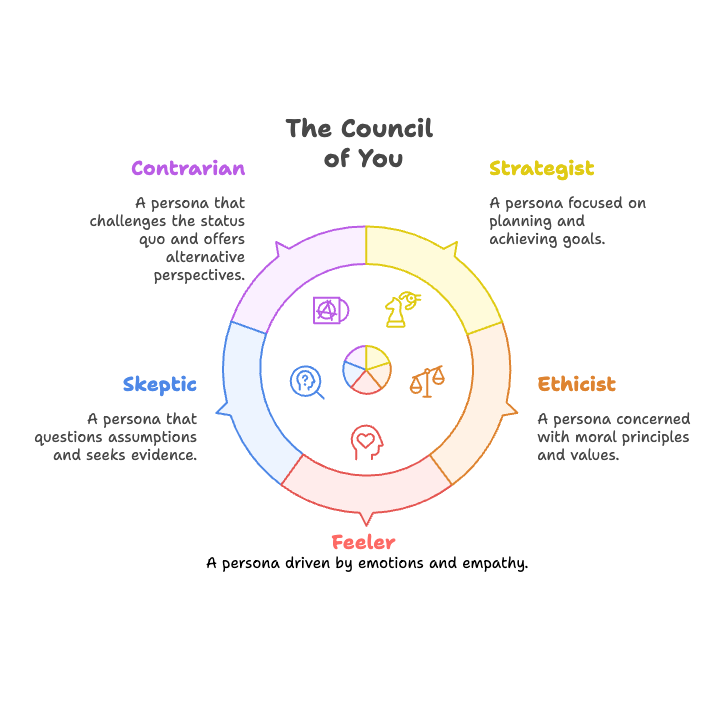

It started small. I'd give it a decision I was stuck on (Should I take this job? Launch this fund? Leave this relationship?) and ask it to weigh in from different angles: moral, strategic, emotional, skeptical.

A virtual council of my better selves.

What I didn't expect was how much I would have to clarify just to get anything useful out of it.

You can't build an AI that thinks like you unless you know how you think. And you can't know how you think until you try to explain it.

It's like trying to write down the rules to a game you've been playing instinctively for years.

Unnerving. Also weirdly thrilling.

This isn't a post about tools. It's a post about you. And how the act of building decision mirrors with AI might be the best metacognitive training you'll ever get.

Ready? Let's go.

Why "Scaling Yourself" Matters (Especially Now)

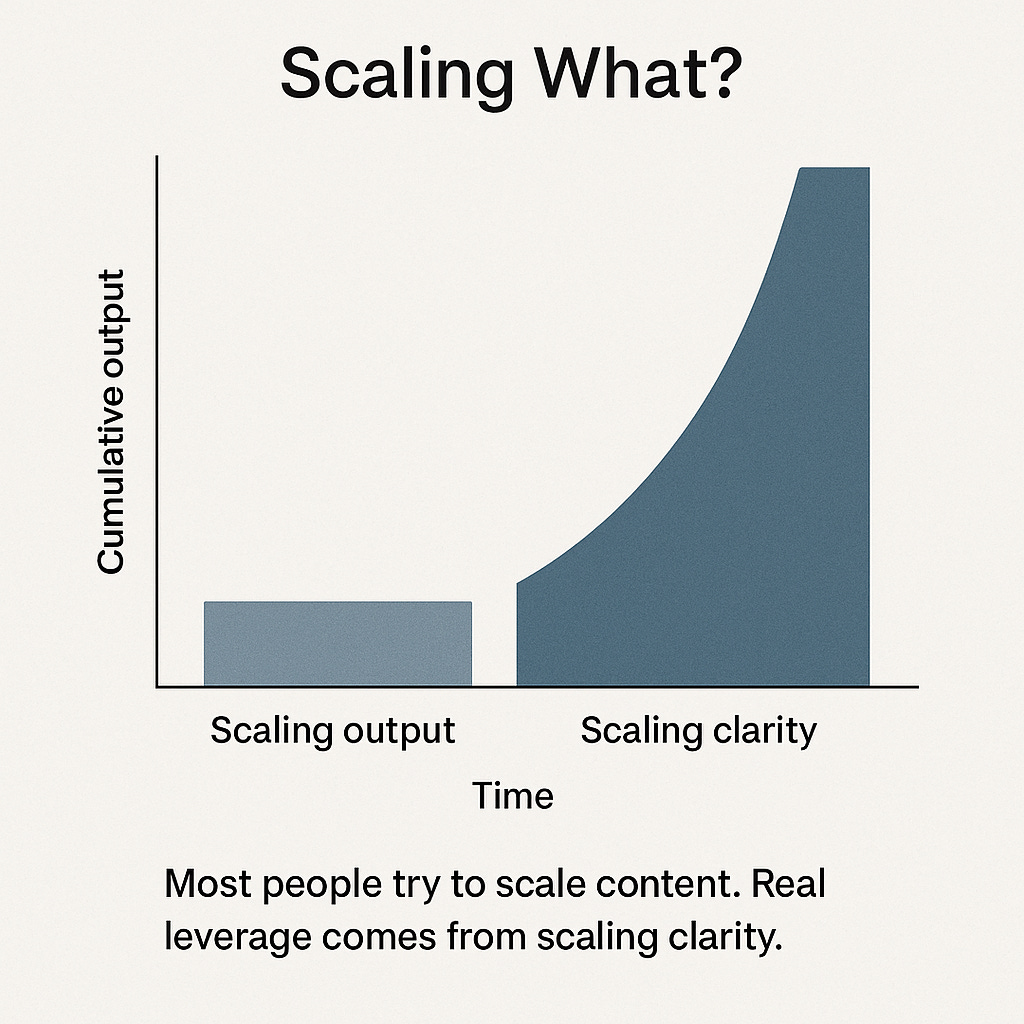

Most of us don't need help thinking more; we need help thinking better.

We're not running out of ideas. We're running out of cognitive bandwidth to hold, process, and shape them into something coherent before the next ping, prompt, or pressure pulls us in another direction. Founders, operators, ambitious knowledge workers: we're all living inside a loop of high-context chaos, trying to juggle insight, execution, urgency, and exhaustion, often all before lunch.

That's where this idea of "scaling yourself" becomes urgent: not in the self-help sense of squeezing more output out of every hour, but in a deeper, more literal way. I mean expanding the surface area of your own cognition by giving your mind more space to breathe, test, reflect, and refine. I mean building scaffolding around your thinking that can hold the weight of complex, conflicting decisions so you're not trying to do it all alone, in your head, at 2AM.

Research suggests we respond more readily to stimuli in our environment than to our own internal thoughts, and that converting internal thoughts into external form can improve productivity and decision quality. The act of externalization, making your implicit thought processes explicit, is what makes journaling work, what makes talking through problems effective, and what makes this approach to AI so powerful.

This isn't about making replicas of yourself or turning your brain into a workflow. It's about creating reflective extensions of how you think, so you can step outside your own blind spots and interrogate your instincts from multiple angles. Not hallucinated clones; more like cognitive fragments, each holding a different lens you already use without realizing it.

When you do it right, it doesn't feel like a hack. It feels like clarity. And clarity, real, grounded, sobering clarity, is a rare and powerful thing in a world that rewards speed over thoughtfulness.

Most people focus on scaling their output: more content, more strategy, more deliverables. But the truth is, your output is only as good as the internal operating system that generates it. And your OS, your ability to frame problems sharply, weigh tradeoffs wisely, ask better questions, and know when to pause, is what actually deserves to be scaled.

So no, this isn't about becoming a hyper-optimized machine. It's about becoming someone who can think with more range, more precision, and more self-awareness than the version of you who's just trying to make it through the day.

It's also not always pleasant. Seeing your thinking laid out in front of you, challenged, cross-examined, stripped of performance, is rarely comfortable.

But it's where the good stuff lives. It's where the insight is. And it's where your next level of clarity is waiting.

The Myth of Offloading Decisions

There's a seductive fantasy baked into a lot of AI discourse: the idea that we'll soon be able to offload entire categories of thinking, that we'll delegate not just the doing, but the deciding, and that in doing so we'll finally be free from the exhausting weight of choice.

You'll hear people say things like, "AI will take work off your plate," as if your plate isn't full because of competing values, messy tradeoffs, or emotional resistance, but because of a simple surplus of tasks.

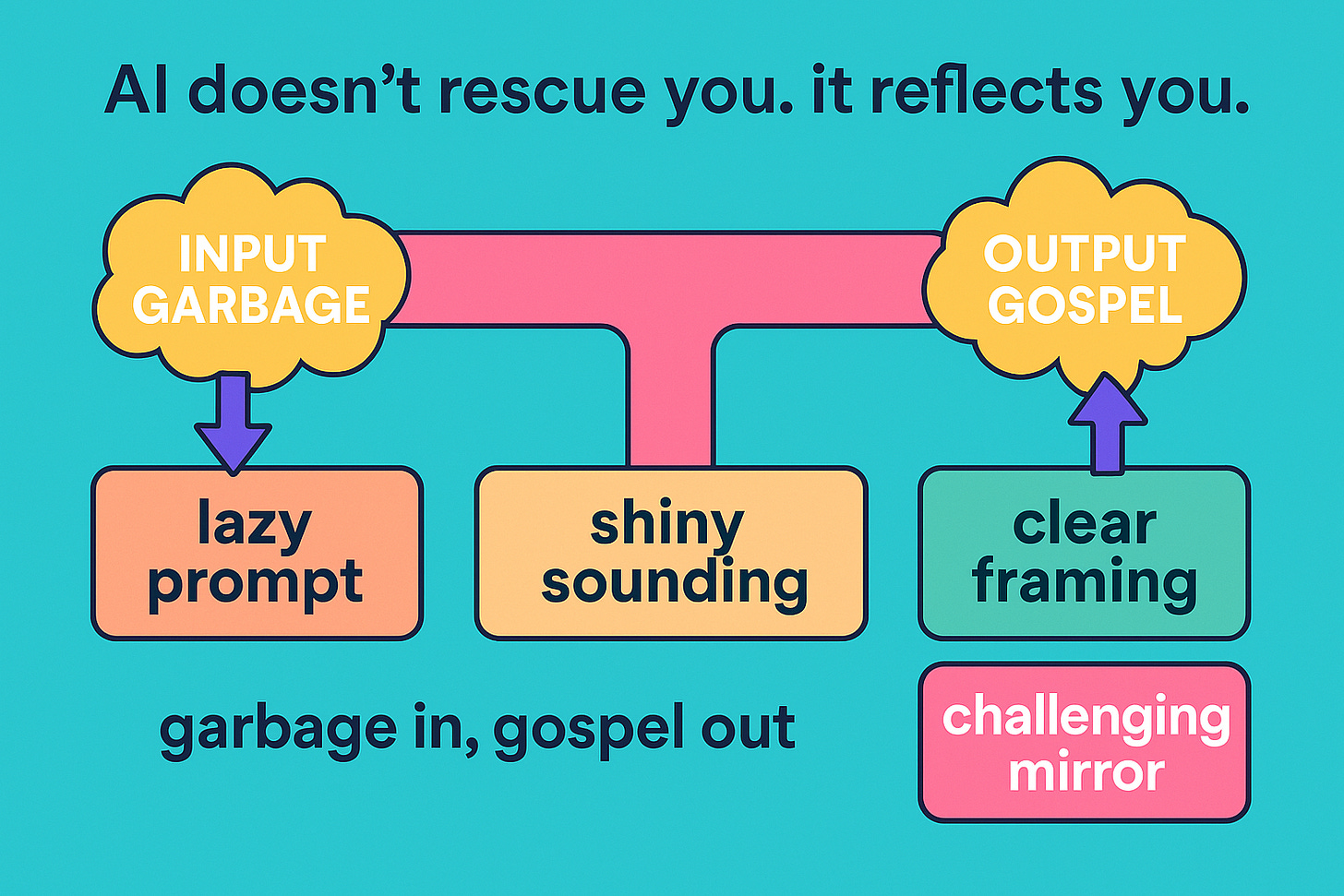

But here's the uncomfortable truth: you can't offload decisions you haven't first understood. You can't outsource judgment. You can delegate the output (the slide deck, the email, the code), but if you haven't taken the time to frame the decision clearly, all you're doing is exporting your confusion and hoping for clarity in return.

That's not leverage. That's abdication.

A meta-analysis of 106 studies found that human-AI systems performed better than humans alone (human augmentation), but on average they did not outperform the better of the two, human or AI, working alone. One likely reason: suboptimal collaboration, in which heuristic information processing creates trust imbalances toward AI systems.

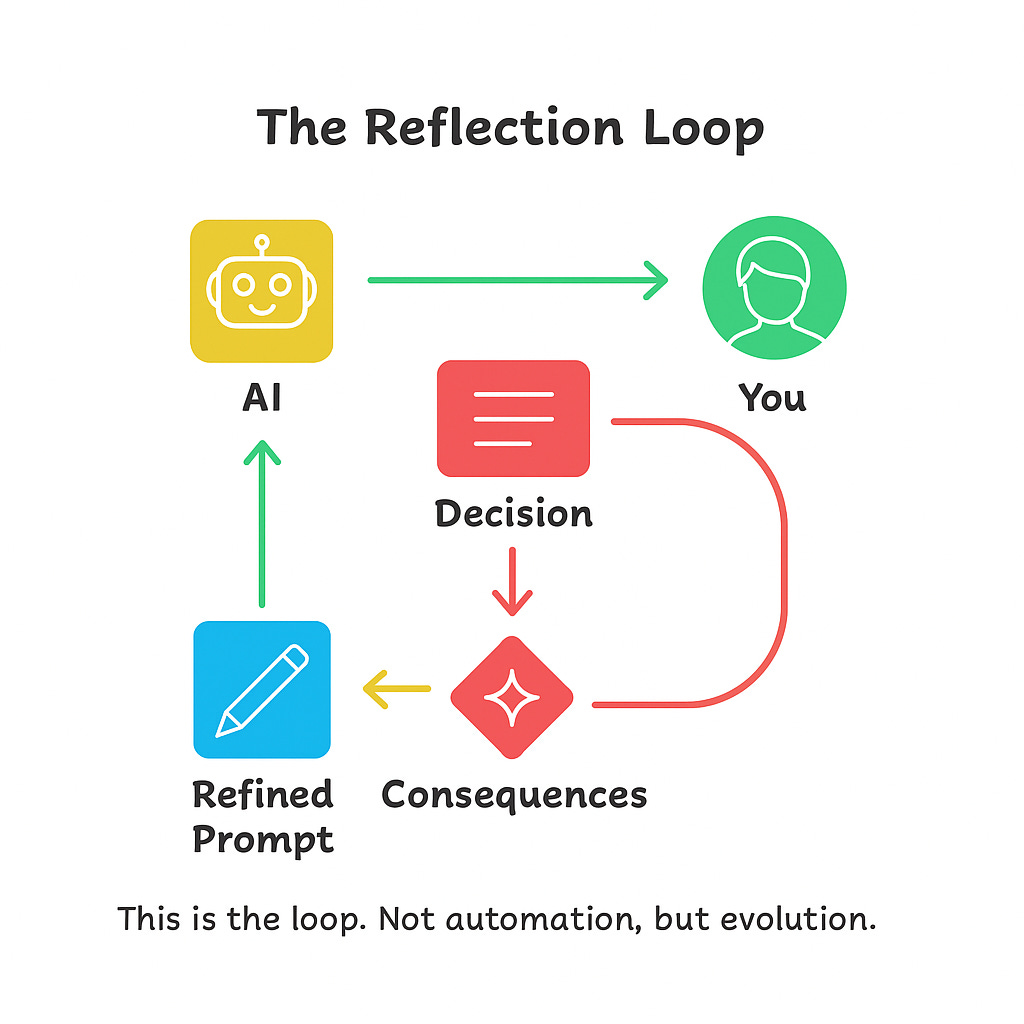

Most people who think they're "using AI" to solve problems are really just throwing prompts at a machine in the hope that it will save them from the discomfort of ambiguity. But AI doesn't rescue you. It reflects you. If your input is fuzzy, reactive, or self-deceptive, then your output will be too, just faster, shinier, and backed by the illusion of objectivity.

This is the part that people don't like hearing: AI will scale whatever you bring to the table. It will amplify your focus or your fog. It will multiply your clarity, or your cowardice. If your thinking is rooted in first principles, it will take you further. If your thinking is rooted in flinch responses dressed up as strategy, it will take you in circles.

The shift, then, is not about figuring out how to get AI to "think for you." It's about designing systems where AI forces you to clarify how you think. And that shift is everything: it moves you from being a passive recipient of recommendations to being an active architect of your own decision-making infrastructure.

You stop asking for answers. You start building mirrors.

Yes, it's still overwhelming. But it's overwhelming in a more structured way, a way that teaches you what kinds of complexity you've been avoiding, and why.

You don't build a decision support system by fleeing the hard parts. You build it by sitting inside the discomfort longer than is convenient, naming the real tensions, and designing for reflection instead of relief.

And that's when the real thinking starts.

How I Built Mine (And What It Showed Me)

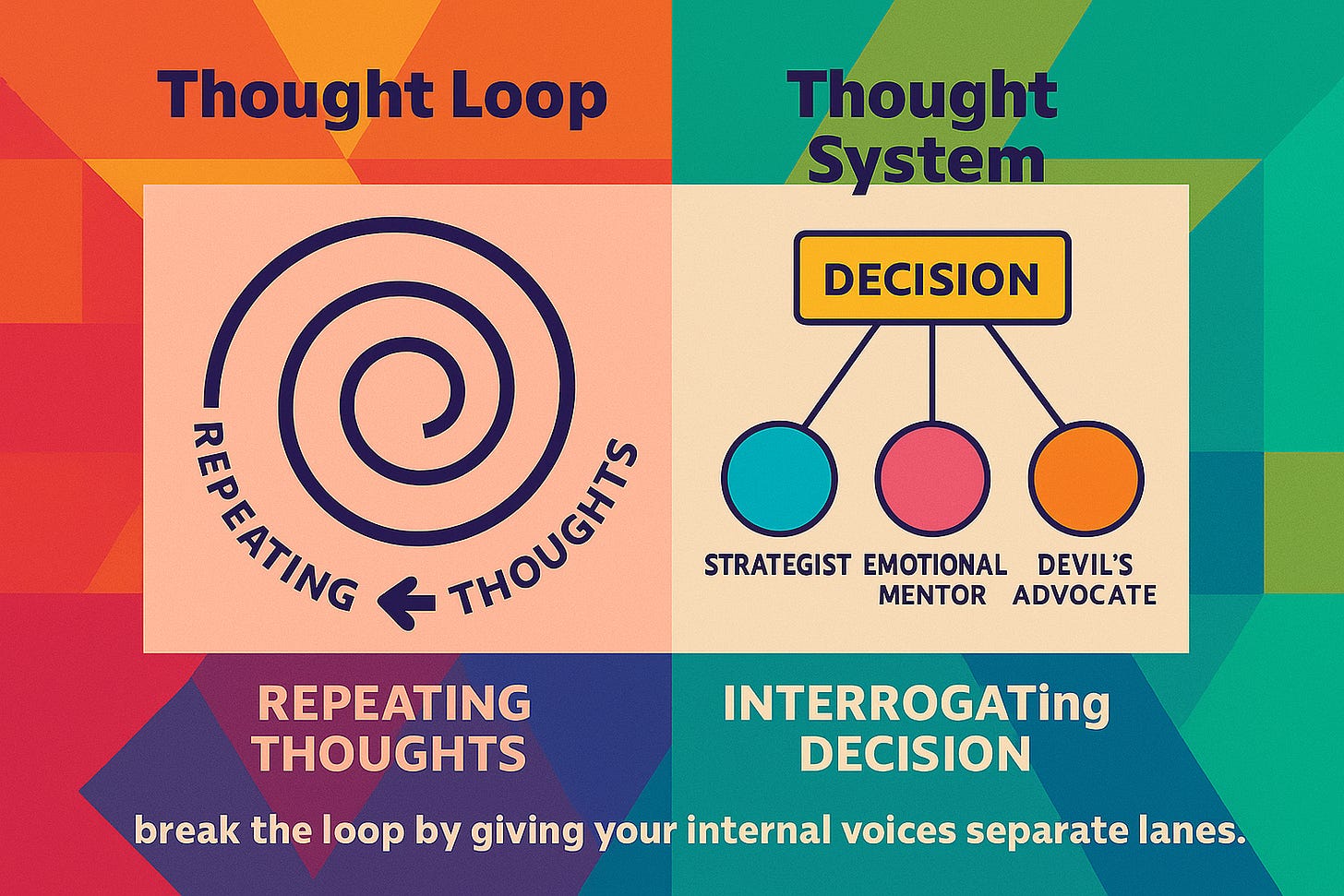

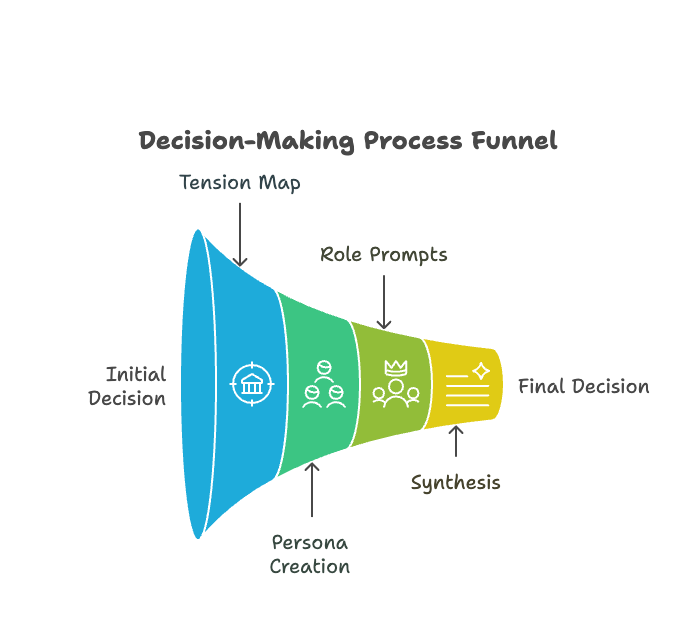

Building decision personas wasn't some "AI hack" I stumbled into. It started, honestly, with discomfort. This persistent feeling that I was thinking in loops, not lines. That I was repeating the same thought patterns in slightly different outfits, pretending they were fresh decisions. So I stopped trying to prompt my way to clarity, and started mapping how I actually think.

This process aligns with what psychologists call metacognition: thinking about thinking. Research links stronger metacognition to better decision-making performance, and people who can articulate their thinking patterns tend to make better decisions across contexts.

The first step was surprisingly analog: I looked at the kinds of decisions I struggled with most and asked myself what invisible scaffolding was shaping my responses. Not the decisions themselves, but the frameworks beneath them: the instincts, biases, values, fears, and rules I was following without realizing I was following them.

This is what I found:

Values logic: What lines won't I cross, even if the outcome is great?

Tradeoff logic: When do I sacrifice speed for quality, or certainty for exploration?

Emotional calculus: How do I weigh what I want versus what I can handle right now?

Time priority: What's urgent, what's important, and what actually matters in a year?

Feedback response: Who do I listen to, who do I ignore, and what does that say about me?

Once I had these categories, I started shaping them into personas: not caricatures, but real mental roles that already existed in how I processed the world.

So:

My Values logic became the Moral Compass

Tradeoffs turned into a Pragmatic Strategist

Emotional conflict took shape as an Emotional Mentor

Time pressure mapped onto a Long-View Planner

And feedback filtering became the Reality Check Analyst

Each one of them had a voice already, buried under habit and haste. My job was to surface them, and then translate them into something AI could understand and speak back to me in.

Here's where the research gets interesting. Studies of multi-persona AI simulations suggest that AI-simulated committee members can surface gaps or novel insights that a single perspective might miss, provided human decision-makers review and integrate the output. What I'd been building intuitively turned out to have support in organizational psychology research.

This was the weird part. Not technical, not even especially complex. Just deeply personal.

I started writing job descriptions for each persona. Not prompts, roles.

Like this: "You are my moral compass. Your job is to ask whether this decision aligns with my values of integrity, fairness, and sustainability. You are not allowed to prioritize outcomes over ethics. If I'm justifying something convenient, push back hard."

Or: "You are my devil's advocate. Assume this plan is flawed. Tell me what I'm ignoring. Dig into my blind spots. Ask what I've been too scared or too proud to admit."

Once I had them all, I'd run decisions through them like a miniature internal boardroom, except every seat was occupied by some slightly wiser version of me.

And what came out of it wasn't just better answers. It was a sharper understanding of how I weigh different parts of myself. Some voices were overrepresented. Like the strategist, who always wanted to move fast and prove a point. Others were startlingly absent. No one was speaking for joy. Or rest. Or curiosity unmoored from outcome.

That part hit harder than I expected.

So I added them. Built a Playful Optimist. Built a Rest Advocate. Built the version of me who doesn't make every decision with a sword in her hand.

And suddenly, the room got more interesting.

Because if I'm going to scale myself, I want to scale the parts I actually want to live with.

Framing Is Thinking (And Prompting Is Just Self-Awareness)

There's this weird reverence around "prompt engineering" in the AI world, as if it's some secret language only the high priests of productivity are fluent in. But once you cut through the hype, prompting isn't magic. It's just structured self-awareness.

At its core, a prompt is a question. And the quality of that question depends entirely on how well you understand what you're actually asking.

Most prompt guides obsess over syntax: use this format, add that modifier, sprinkle in some tone instructions for flavor. But almost none of them stop to ask: Do you even know what you want? Have you framed the problem clearly enough for anyone, let alone a machine, to help you?

And this is where it gets uncomfortable. Because if you can't prompt clearly, chances are you don't understand the problem clearly. And AI? It's not a mind reader. It's a mirror. It takes your framing, your assumptions, your fuzziness, your performance, and reflects them back with eerie precision.

Let's take an example.

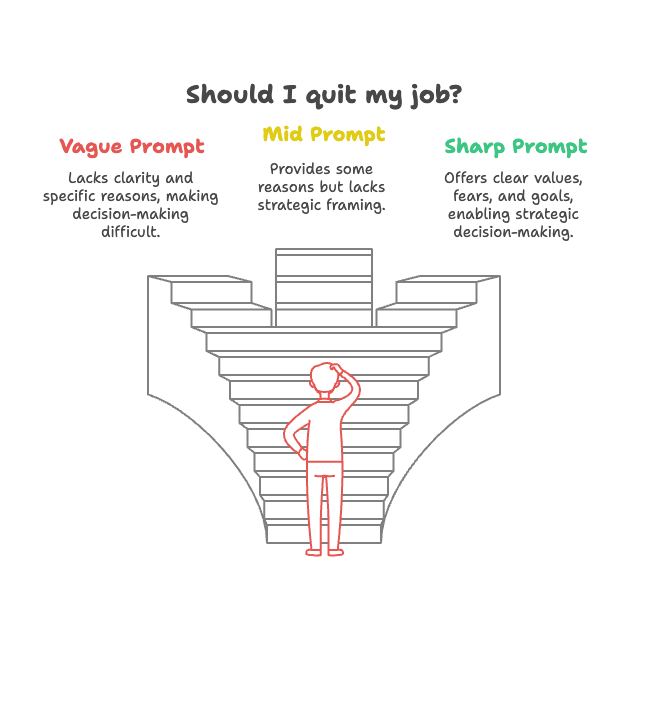

You ask: "Should I quit my job?"

Simple, right? Except, what does "should" mean here? Financially viable? Emotionally sustainable? Ethically aligned? Are you optimizing for stability? Growth? Sanity?

Now try this instead: "I'm considering quitting my job. I value autonomy, learning, and emotional safety. This job pays well but leaves me drained. I'm unsure if the tradeoff is worth it. Help me evaluate this using my values framework, emotional well-being, and long-term growth lens."

See the difference?

The second version isn't just a better prompt. It's a better thought.

Framing is the hidden infrastructure of every decision. It defines what you notice, what you ignore, what you label as success, and what you tolerate as "just part of it." And when you bring that frame to an AI system, it will stretch it, whether that frame is strong and clear, or distorted and hollow.

That's the real risk: not that AI gets things wrong, but that it gets your framing right and runs with it before you've had a chance to examine it.

So here's the rule I started living by: every prompt is a mirror.

Before I send it off, I ask myself:

Is this the real question, or just the easy one?

Am I avoiding a value conflict by hiding it inside strategy?

What am I pretending not to care about, because it's inconvenient?

Because that's the thing: AI can simulate thought. But it can't simulate integrity. That part's still on you.

What AI Can't Do (And Shouldn't)

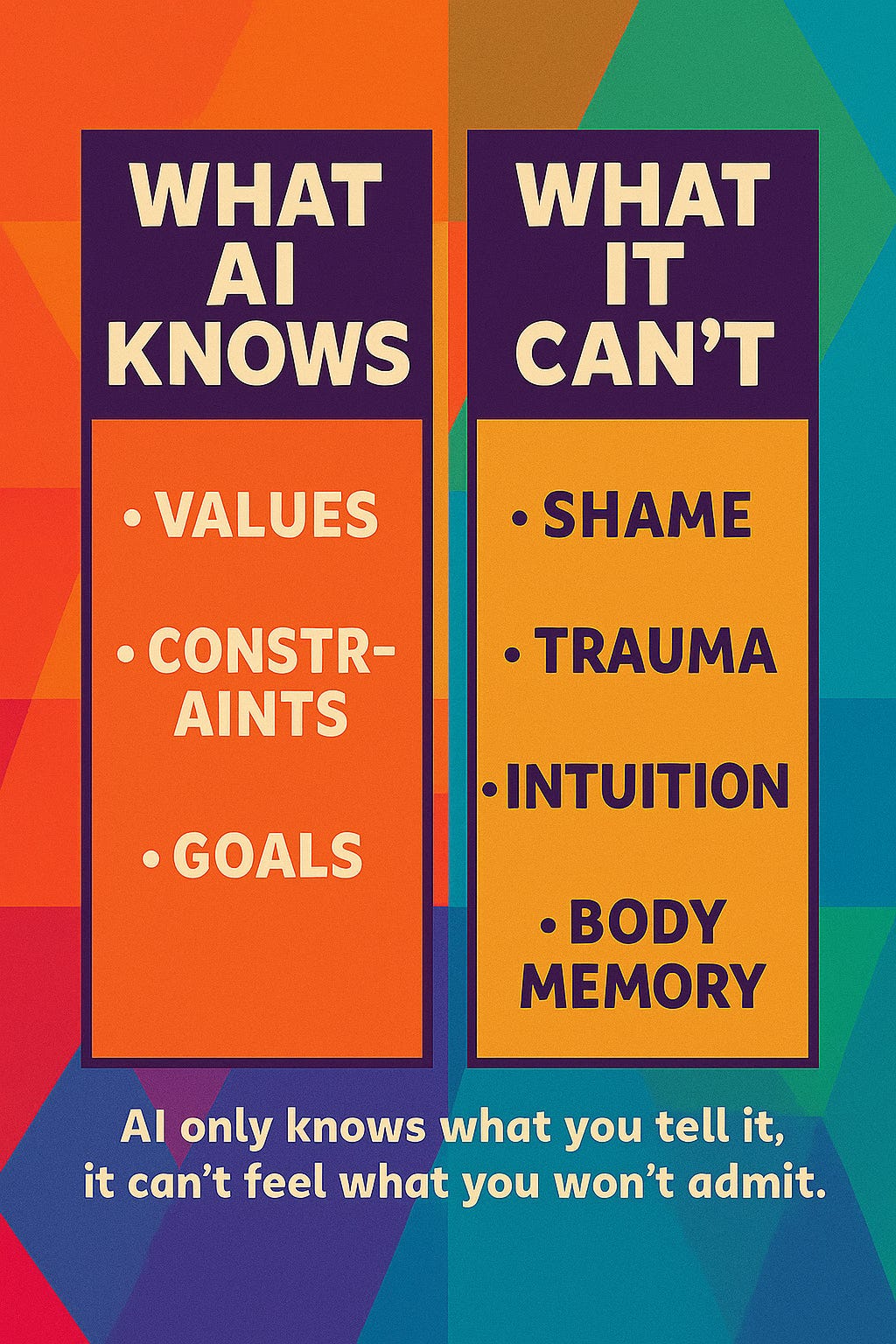

For all the power and pattern-matching brilliance AI brings to the table, there's a hard edge it can't and shouldn't cross. It can help you think better, sure. But it can't tell you what matters.

AI can simulate a moral voice. It can play devil's advocate with flair. It can walk you through tradeoffs and even make you feel like you're having a deep conversation. But at the end of the day, it doesn't know you. Not the real you. It doesn't hold your childhood hangups, your relationship to shame, your scar tissue from that one job that broke you, your spiritual framework, your hunger for meaning, or the specific flavor of fear that wakes you up at 3am. It doesn't hold your body. Your contradictions. Your soul.

It only knows what you choose to feed it.

This limitation is actually crucial. Research on human-AI collaboration suggests the pairing can improve decision-making when each side plays to its strengths: AI handles analytical processing, while humans provide contextual understanding and ethical oversight.

So when people say, "AI told me to do X," I flinch a little. Not because the answer is always wrong, but because the accountability is often missing.

AI didn't "tell" you anything. It responded to the way you framed the question, the data you chose to highlight, and the patterns you taught it to prioritize. It didn't make the call, you did. Or you should have.

That's the part we have to protect. Because when you start outsourcing decisions without interrogating them, what you're really outsourcing isn't labor. It's responsibility.

And the slippery part? AI makes it feel responsible. It talks with authority. It returns results with confidence. It wraps complexity in a clean narrative arc. But if it agrees with you too quickly? It probably doesn't understand you.

So here's my test:

If the answer feels too easy, I dig.

If it flatters me, I push back.

If it aligns too perfectly with what I secretly want to hear, I force it to argue with itself.

And if I'm using it to numb a hard truth, I pause. Because that's not decision support. That's just offloading wearing insight's cloak.

This work requires staying emotionally present. Especially when you're tired. Especially when you want to hand off the hard part. AI can help you structure your thoughts, widen your lens, and sharpen your perspective. But it can't do the reckoning for you. It shouldn't. That part belongs to you.

How to Start (Build Your Own Decision Mirror)

This is the part where people usually expect a list of tools, or a magic prompt template, or a Notion board with 50 pre-trained GPT agents ready to go. But honestly, you don't need any of that to begin.

You just need one real decision. One you've been circling. One you're a little afraid to make.

Not the life-defining kind, not yet. Start with something mid-weight. Something annoying. Something human.

Like:

Should I take on this freelance project?

Should I push this product launch or delay it?

Should I text that person back?

Then follow this:

Step 1: Name the Decision

Write it down. Not just "Take the gig?" Go deeper. What's really at stake? Is it money? Reputation? Burnout? Pride?

Step 2: Map the Tensions

Think about what's pulling you in different directions. Is it the part of you that wants stability vs the part that wants challenge? The one that wants rest vs the one that fears irrelevance? Note them down. You already know these voices. You live with them.

Step 3: Create the Personas

Translate those tensions into roles. These aren't characters; you're not writing a novel. These are facets of you, given clarity and function.

Research suggests one reason this helps: metacognitive training appears to aid decision-making by helping people monitor and control their own cognitive processes.

Like:

The Strategist, who wants momentum

The Emotional Mentor, who checks for burnout

The Devil's Advocate, who assumes you're lying to yourself

The Joy Advocate, who asks if it'll even make you happy

The Rest Defender, who wants to protect your peace

Step 4: Write Job Descriptions

Give each persona a job. Literally. Don't overthink it, just write what you need them to hold.

Example: "You are my Emotional Mentor. Your job is to help me understand how each option affects my joy, peace, and emotional energy. Remind me of patterns I might be repeating. If I'm trying to earn rest instead of claim it, say so. No soft-pedaling."

Or: "You are my Devil's Advocate. You assume this is a bad idea. Your job is to find blind spots, consequences, or emotional loopholes. Do not prioritize my ego."

Make them specific. Make them sharp. Make them earn their seat at your table.

Step 5: Feed the Decision to Each Persona

One at a time, let them speak. Whether that's you journaling in their voice, or typing into a chat window with AI as that role, doesn't matter. What matters is giving each perspective the space to unfold. Ask them:

What do you see?

What are you worried about?

What are you hopeful about?

What would the others disagree with you on?
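If you'd rather not retype each role every time, here is a minimal sketch of Step 5, assuming you paste each generated prompt into its own chat window. The two job descriptions are the examples from Step 4; `PERSONAS`, `persona_prompt`, and `council` are hypothetical names, and no AI service is called. Each persona gets a separate prompt (so the voices don't blend into one answer) and is told who else sits at the table, which gives the last question something to push against.

```python
# Job descriptions copied from the Step 4 examples; add your own personas here.
PERSONAS = {
    "Emotional Mentor": (
        "You are my Emotional Mentor. Your job is to help me understand how each "
        "option affects my joy, peace, and emotional energy. Remind me of patterns "
        "I might be repeating. If I'm trying to earn rest instead of claim it, say so. "
        "No soft-pedaling."
    ),
    "Devil's Advocate": (
        "You are my Devil's Advocate. You assume this is a bad idea. Your job is to "
        "find blind spots, consequences, or emotional loopholes. Do not prioritize my ego."
    ),
}

# The four Step 5 questions, asked of every persona.
QUESTIONS = [
    "What do you see?",
    "What are you worried about?",
    "What are you hopeful about?",
    "What would the others disagree with you on?",
]


def persona_prompt(job: str, decision: str, others: list[str]) -> str:
    """Build one self-contained prompt: role, decision, the other seats, questions."""
    lines = [job, "", f"The decision: {decision}"]
    if others:
        lines.append(f"Also at the table: {', '.join(others)}.")
    lines.append("")
    lines += [f"- {q}" for q in QUESTIONS]
    return "\n".join(lines)


def council(decision: str, personas: dict[str, str]) -> dict[str, str]:
    """Return one prompt per persona, keyed by persona name."""
    return {
        name: persona_prompt(job, decision, [o for o in personas if o != name])
        for name, job in personas.items()
    }


if __name__ == "__main__":
    for name, prompt in council("Should I take on this freelance project?", PERSONAS).items():
        print(f"=== {name} ===\n{prompt}\n")
```

Keeping the personas in a plain dictionary means adding a Joy Advocate or Rest Defender is one new entry, and every existing persona automatically learns the new voice is in the room.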

Step 6: Synthesize, Slowly

Now sit back. Don't rush. Look across the responses. What changed? What surprised you? What voice came out strongest? Which one do you always ignore? What tension still feels unresolved?

That is the beginning of clarity.

Not a perfect answer. Not certainty. Just more signal, less noise. More internal coherence. Enough to move forward with eyes open.

You can get fancy later. Store your personas. Build reusable templates. Assign weights. Reflect over time. But the heart of the thing doesn't need complexity. It needs honesty.
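For the "get fancy later" stage, here is one possible sketch of stored personas with weights. The weighting scheme is an assumption, not something prescribed here: after reading each response, you score that persona's lean from -1 (against) to +1 (for), and the weights are placeholders you'd tune over time. `STORED_PERSONAS`, `weighted_lean`, and `loudest_voice` are hypothetical names; only Python's standard `json` module is used.

```python
import json

# Hypothetical stored personas; in practice this JSON might live in a file or a Notion export.
STORED_PERSONAS = json.loads("""
[
  {"name": "Strategist", "weight": 1.0},
  {"name": "Emotional Mentor", "weight": 1.5},
  {"name": "Rest Defender", "weight": 1.0}
]
""")


def weighted_lean(leans: dict[str, float], personas: list[dict]) -> float:
    """Weighted average of each persona's lean (-1 against, +1 for), over personas that spoke."""
    weighted = [(p["weight"], leans[p["name"]]) for p in personas if p["name"] in leans]
    total = sum(w for w, _ in weighted)
    if total == 0:
        raise ValueError("No weighted persona has weighed in yet")
    return sum(w * lean for w, lean in weighted) / total


def loudest_voice(leans: dict[str, float], personas: list[dict]) -> str:
    """Name the persona whose weighted lean has the largest magnitude."""
    weights = {p["name"]: p["weight"] for p in personas}
    return max(leans, key=lambda name: abs(weights.get(name, 0.0) * leans[name]))


# Leans you might assign after reading each persona's response.
leans = {"Strategist": 0.8, "Emotional Mentor": -0.6, "Rest Defender": -0.4}
print(round(weighted_lean(leans, STORED_PERSONAS), 3))
print(loudest_voice(leans, STORED_PERSONAS))
```

With these placeholder numbers the council leans slightly against and the Emotional Mentor is the loudest voice. Treat the number as a prompt for Step 6 ("What voice came out strongest?"), not as a verdict.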

This isn't about systematizing your soul. It's about letting your own thoughts meet you in the light.

And ok, fine, here's a Notion template.

Build Mirrors, Not Oracles

If there's one thing I've learned from building AI systems that "think like me," it's this:

You don't need answers. You need reflection.

Everyone wants the oracle. The all-knowing AI overlord that sees the whole chessboard and whispers the one right move that gives you your Gukesh vs Magnus moment. The tool that removes doubt. The clarity without cost.

But the oracle isn't real. And even if it were, it'd be dangerous.

Because what makes us human isn't knowing. It's choosing.

And you can't make good choices without grappling with the why beneath them.

You can build mirrors, though. Mirrors that don't flatter. Mirrors that don't lie. Mirrors that make you pause and say: is this really how I think? Is this who I want to be?

Research points the same way. Work on "intelligence augmentation" frames AI as most effective when it enables seamless collaboration between humans and intelligent machines, rather than replacing people. A 2024 advisory group concluded that generative AI is best viewed as "a partner that enhances human capacities, providing insights and efficiencies without displacing the critical judgment and ethical considerations that only humans can offer."

That's what AI can offer: not certainty, not salvation, but confrontation.

It can amplify your values. Expose your blind spots. Argue with your ego. Remind you, again and again, that even the smartest tools are only as good as the questions you dare to ask.

And if you do it right, you don't just scale your cognition. You scale your character.

Because every time you build one of these decision systems, every time you take your half-formed thoughts and shape them into something testable, accountable, and clear, you're making a bet.

On your judgment. On your priorities. On the future version of you who has to live with the consequences.

Make it a bet worth placing.

If this resonated:

Share it with someone who's tired of "AI hacks" and wants something deeper.

Build your first decision persona and tag me, I'd love to see what voices you invite to your table.

And if you've been calling autopilot "strategy," maybe it's time to take back the controls.

Go build better mirrors. You might finally see yourself clearly.
